Code your own Idealo price machine

on Sun Jan 12 2020 by Ingo Hinterding

This Python script checks websites for product prices and sends an email if a desired price point is met.

Learning new technology can be extremely dull, so I try to find an interesting project instead of following the twentieth “Create a to-do list app” tutorial. This is the little project I used to dive into Python (again). It is based on a great tutorial by Dev Ed: https://www.youtube.com/watch?v=Bg9r_yLk7VY

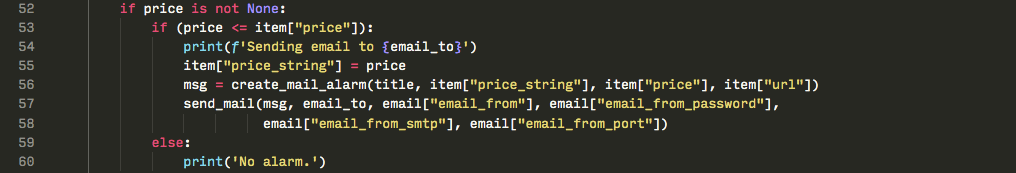

The functionality is simple: the script loads a website (currently from either Amazon or the Nintendo Online Store), looks for the price on that page (e.g. for a Switch game), and if that price is equal to or lower than a user-defined threshold, it sends out an email with the price and a link to the product.
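The core decision fits in a few lines of Python. The following is a minimal sketch, not the code from the pyscraper repository: the names `parse_price` and `is_deal` are hypothetical, and it assumes German-formatted price strings such as "19,99 €" or "EUR 19,99", with `.` as the thousands separator and `,` as the decimal separator.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Turn a German-formatted price string like '1.299,99 €' into a Decimal.

    Assumption: '.' is the thousands separator and ',' the decimal separator.
    """
    # Keep only digits and separators, dropping currency symbols and spaces
    cleaned = re.sub(r"[^\d.,]", "", text)
    # Remove thousands separators, then turn the decimal comma into a dot
    cleaned = cleaned.replace(".", "").replace(",", ".")
    return Decimal(cleaned)


def is_deal(price_text: str, threshold: Decimal) -> bool:
    """True if the price is equal to or lower than the user-defined threshold."""
    return parse_price(price_text) <= threshold


print(is_deal("19,99 €", Decimal("20")))    # True
print(is_deal("EUR 24,99", Decimal("20")))  # False
```

Using `Decimal` rather than `float` keeps money amounts exact, which is the usual choice when comparing prices.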

Now I will be informed whenever a product or game I’m interested in is on sale! \o/

Here’s a terminal output showing how pyscraper checks for various products on Amazon and Nintendo:

I’m using Beautiful Soup and the Python requests library to fetch a website and parse its content. Python makes it really easy and fun to work with online data; no wonder it is used in so many scientific and data science projects.

Getting the correct price from the Amazon website was straightforward, as the price has a unique ID that can be searched for. The only challenge was checking for multiple IDs, since a product can be on sale, discounted, etc.
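Checking several IDs in a fixed order can be sketched as follows. To keep the sketch dependency-free it swaps Beautiful Soup for the standard library's `html.parser`; the helper names `IdTextCollector` and `find_price` are hypothetical, and the three IDs are the ones named in this article (Amazon's markup may well have changed since).

```python
from html.parser import HTMLParser
from typing import Dict, Iterable, Optional

# Price element IDs named in this article (Amazon's markup may have changed since)
PRICE_IDS = ("priceblock_ourprice", "priceblock_dealprice", "priceblock_saleprice")

# Elements that never have a closing tag, so they must not change the nesting depth
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class IdTextCollector(HTMLParser):
    """Collect the text inside every element whose id attribute is in `wanted`."""

    def __init__(self, wanted: Iterable[str]) -> None:
        super().__init__()
        self.wanted = set(wanted)
        self.found: Dict[str, str] = {}
        self._current: Optional[str] = None  # id of the element we are inside
        self._depth = 0  # open tags since entering that element

    def handle_starttag(self, tag, attrs):
        if self._current is not None:
            if tag not in VOID_TAGS:
                self._depth += 1
            return
        element_id = dict(attrs).get("id")
        if element_id in self.wanted:
            self._current = element_id
            self._depth = 1
            self.found[element_id] = ""

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags like <br/> contain no text

    def handle_endtag(self, tag):
        if self._current is not None:
            self._depth -= 1
            if self._depth == 0:
                self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.found[self._current] += data


def find_price(html: str, ids: Iterable[str] = PRICE_IDS) -> Optional[str]:
    """Return the text of the first matching price element, in the order of `ids`."""
    ids = tuple(ids)
    collector = IdTextCollector(ids)
    collector.feed(html)
    collector.close()
    for price_id in ids:
        if price_id in collector.found:
            return collector.found[price_id].strip()
    return None


print(find_price('<span id="priceblock_dealprice">EUR 17,99</span>'))  # EUR 17,99
```

With Beautiful Soup, the whole collector class reduces to `soup.find(id=price_id)` inside the same loop. The IDs are kept in a tuple so the lookup order is fixed; iterating over a set gives no guaranteed order.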

```python
# Amazon uses different element IDs for regular, deal and sale prices
price_strings = {"priceblock_ourprice", "priceblock_dealprice", "priceblock_saleprice"}
```

I also realized that Amazon shows an “Are you a robot?” check after several similar requests (which makes sense, as this is a price bot), but I got around it cheaply by slightly modifying the request header each time:

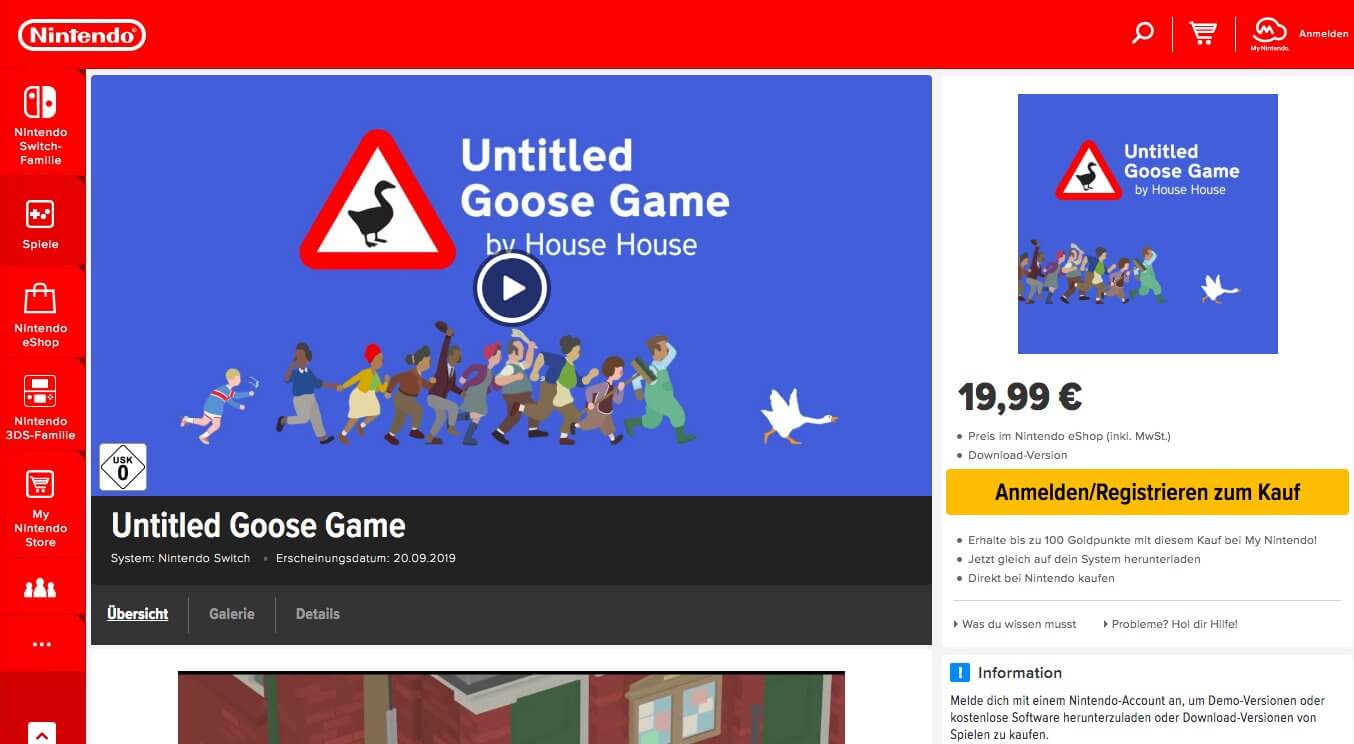

```python
import random
headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.' + str(random.randint(0, 1000))}
```

For the Nintendo website, my approach is a bit different. It was a real head-scratcher until I realized that I never got any prices off the page because they are loaded asynchronously via Ajax. Neither requests nor Beautiful Soup executes JavaScript, so the price never shows up in the HTML the script receives.

To make it work, I inspected the network traffic with Chrome DevTools and identified the Ajax call. Its response contains all the information I need: https://api.ec.nintendo.com/v1/price?country=DE&lang=de&ids=70010000014140

```json
{"personalized":false,"country":"DE","prices":[{"title_id":70010000014140,"sales_status":"onsale","regular_price":{"amount":"19,99 €","currency":"EUR","raw_value":"19.99"}}]}
```
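Because the API returns JSON, no HTML parsing is needed for Nintendo. Here is a minimal sketch using Python's `json` module with a hypothetical `extract_price` helper; it reads `raw_value`, which is already a dot-separated number. The sample response above only contains `regular_price`. The sketch assumes, without having verified it, that a game on sale additionally carries a `discount_price` object of the same shape, and prefers it when present.

```python
import json
from decimal import Decimal
from typing import Optional

# The response shown above, copied verbatim
SAMPLE = '{"personalized":false,"country":"DE","prices":[{"title_id":70010000014140,"sales_status":"onsale","regular_price":{"amount":"19,99 €","currency":"EUR","raw_value":"19.99"}}]}'


def extract_price(payload: str) -> Optional[Decimal]:
    """Return the current price of the first title in a price API response.

    Assumption: a discounted game carries a "discount_price" object shaped like
    "regular_price"; only "regular_price" appears in the sample above.
    """
    data = json.loads(payload)
    if not data.get("prices"):
        return None
    entry = data["prices"][0]
    price = entry.get("discount_price") or entry.get("regular_price")
    if price is None:
        return None
    # raw_value is already dot-formatted ("19.99"), so no locale parsing is needed
    return Decimal(price["raw_value"])


print(extract_price(SAMPLE))  # 19.99
```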

I could call the URL above directly to fetch the price, but I wanted the script to be super convenient: it should only require the website URL of the game, not the Ajax call. I examined the website's JavaScript and found the game ID there, which is 70010000014140. My regex skills are quite poor and I failed to find out how to search for JavaScript code with BeautifulSoup, so my way to retrieve that game ID is undoubtedly hacky:

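```python
# "offdeviceNsuID" is 14 characters; +18 also skips the characters between the key and the ID
nsuid_pos = str(page.content).find("offdeviceNsuID") + 18
# The game ID is 14 digits long
game_id = str(page.content)[nsuid_pos:nsuid_pos + 14]
```

Not a beauty, but it works.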
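For comparison, a regular expression can replace the fixed offsets (+18 and 14 characters), and the extracted ID can then be turned into the API URL from above. This is a sketch under an assumption I have not checked against the current Nintendo markup: that the page source contains the key `offdeviceNsuID` followed, after only non-word characters such as quotes, colons or spaces, by the numeric ID. The names `find_game_id` and `build_price_url` are hypothetical.

```python
import re
from typing import Optional
from urllib.parse import urlencode

# Assumption: the ID is the first run of digits after the key, with only
# non-word characters such as quotes, colons or spaces in between
NSUID_PATTERN = re.compile(r"offdeviceNsuID\W*(\d+)")

# Price endpoint as observed in the browser's network traffic; unofficial, may change
PRICE_API = "https://api.ec.nintendo.com/v1/price"


def find_game_id(html: str) -> Optional[str]:
    """Return the numeric game ID that follows 'offdeviceNsuID', or None."""
    match = NSUID_PATTERN.search(html)
    return match.group(1) if match else None


def build_price_url(game_id: str, country: str = "DE", lang: str = "de") -> str:
    """Build the price API URL for a single game ID."""
    query = urlencode({"country": country, "lang": lang, "ids": game_id})
    return f"{PRICE_API}?{query}"


# Made-up snippet for illustration, not real Nintendo markup
snippet = '{"offdeviceNsuID": "70010000014140"}'
game_id = find_game_id(snippet)
print(game_id)  # 70010000014140
print(build_price_url(game_id))
# https://api.ec.nintendo.com/v1/price?country=DE&lang=de&ids=70010000014140
```

In the script, `page.text` is the better input than `str(page.content)`: `page.content` is bytes, so `str()` yields its `b'...'` representation, while requests' `Response.text` is the decoded page.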

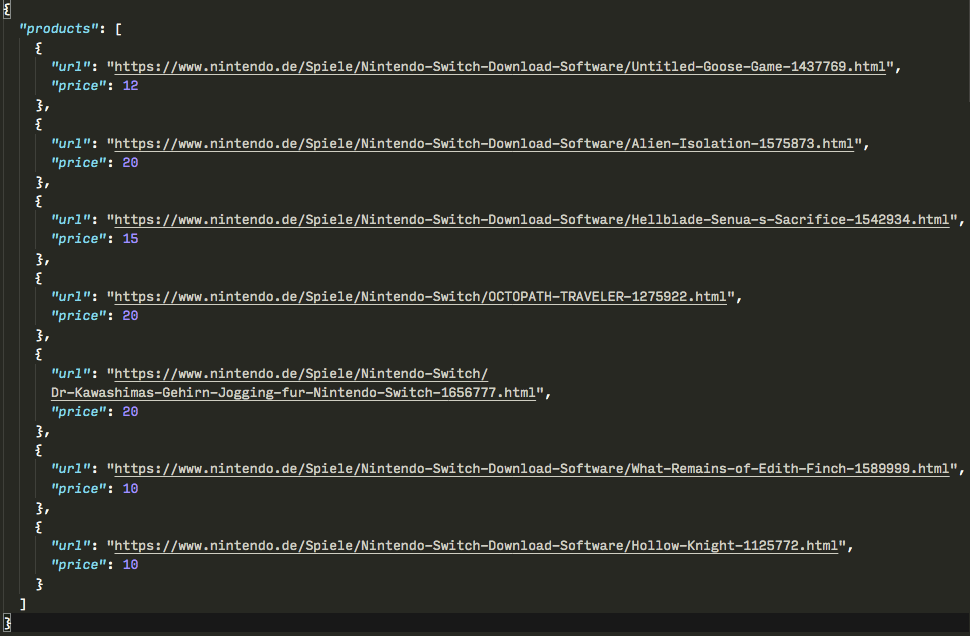

The product links are stored in JSON format.
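The exact file layout is documented in the repository. The sketch below assumes a hypothetical layout, a list of objects with `name`, `url` and `target_price` keys, to show how such a list can be loaded into typed records; `load_products` and `Product` are illustrative names.

```python
import json
from decimal import Decimal
from typing import List, NamedTuple


class Product(NamedTuple):
    name: str
    url: str
    target_price: Decimal


def load_products(text: str) -> List[Product]:
    """Parse a JSON list of products into Product tuples."""
    # parse_float=Decimal keeps prices exact instead of turning them into binary floats
    items = json.loads(text, parse_float=Decimal)
    return [Product(i["name"], i["url"], Decimal(i["target_price"])) for i in items]


# Hypothetical file contents; the keys "name", "url" and "target_price" are assumptions
EXAMPLE = """
[
  {"name": "Some Switch game", "url": "https://example.com/switch-game", "target_price": 19.99},
  {"name": "Some book", "url": "https://example.com/book", "target_price": 10}
]
"""

for product in load_products(EXAMPLE):
    print(product.name, product.target_price)
```

In the script, the text would come from reading the JSON file, e.g. with `pathlib.Path(...).read_text()`.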

I installed the script on my Raspberry Pi 4 by adding a line to the crontab:

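```
55 14 * * * /usr/bin/python3 /home/pi/code/scraper/scraper.py >> /home/pi/code/scraper/log.txt
```

This runs the script every day at 14:55. Instead of printing the results to a terminal, the output is appended to a log file, so that I can troubleshoot the script if anything goes wrong. Note that `>>` only captures standard output; Python tracebacks go to standard error, which can be added to the log by appending `2>&1`. As a side effect, I finally have a reason to keep the Raspberry Pi running all the time…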

All the code, plus more documentation on how to set up your email and configure pyscraper, can be found on GitHub: https://github.com/Esshahn/pyscraper
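Sending the notification needs nothing beyond the standard library. The sketch below is not pyscraper's implementation (that is in the repository); `build_alert` and `send_alert` are hypothetical helpers, and the SMTP host, port and credentials are placeholders for whatever your mail provider requires. It assumes a server that supports STARTTLS, typically on port 587.

```python
import smtplib
from email.message import EmailMessage


def build_alert(sender: str, recipient: str, product_name: str, price: str, url: str) -> EmailMessage:
    """Compose the price alert email (hypothetical helper)."""
    msg = EmailMessage()
    msg["Subject"] = f"Price alert: {product_name} now {price}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(f"{product_name} is now available for {price}.\n\n{url}\n")
    return msg


def send_alert(msg: EmailMessage, host: str, port: int, user: str, password: str) -> None:
    """Send the message over SMTP, upgrading the connection with STARTTLS first."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(user, password)
        server.send_message(msg)
```

Keeping message construction separate from sending makes it easy to check the email's contents without talking to a mail server.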
