You are likely to be eaten by a grue.

Text adventures are a form of interactive fiction. You type commands like “examine sword” or “go north” to explore a world and solve puzzles. They’re heavily inspired by Choose Your Own Adventure books and, of course, classic pen-and-paper roleplaying games.

Some of the earliest text adventures like Colossal Cave Adventure (1976), Adventureland (1978), and Dungeon were played on mainframe computers like the PDP-10, usually hidden away in the computer labs of universities such as Stanford or MIT.

One of the most influential titles was Zork, originally developed in 1977–79 by Tim Anderson, Marc Blank, Bruce Daniels, and Dave Lebling at MIT’s Dynamic Modeling Group. Zork dropped players into the “Great Underground Empire,” a mysterious, monster-ridden world filled with puzzles, treasures, and very few signposts. After gaining popularity as a mainframe game, it was later ported to home computers like the Apple II and Commodore 64 and sold by Infocom, becoming one of the best-selling text adventures of all time.

I always wanted to visit the world of Zork, to explore its dungeons and dangers, collect treasures, and solve ancient puzzles. More than anything, I wanted to experience this iconic piece of computing history for myself. I’ve tried several times, only to admit, somewhat bitterly, that I absolutely suck at playing text adventures. I can’t keep track of locations, I get distracted, and frankly, I lack the patience to properly immerse myself.

Still, I find them fascinating. There’s something magical about a world that exists entirely in your mind. When my son was younger, I used to read him stories, and we’d often twist the narrative, inventing characters and silly plot turns as we went. I love the idea of making text adventures more accessible to kids as a space for imagination and exploration.

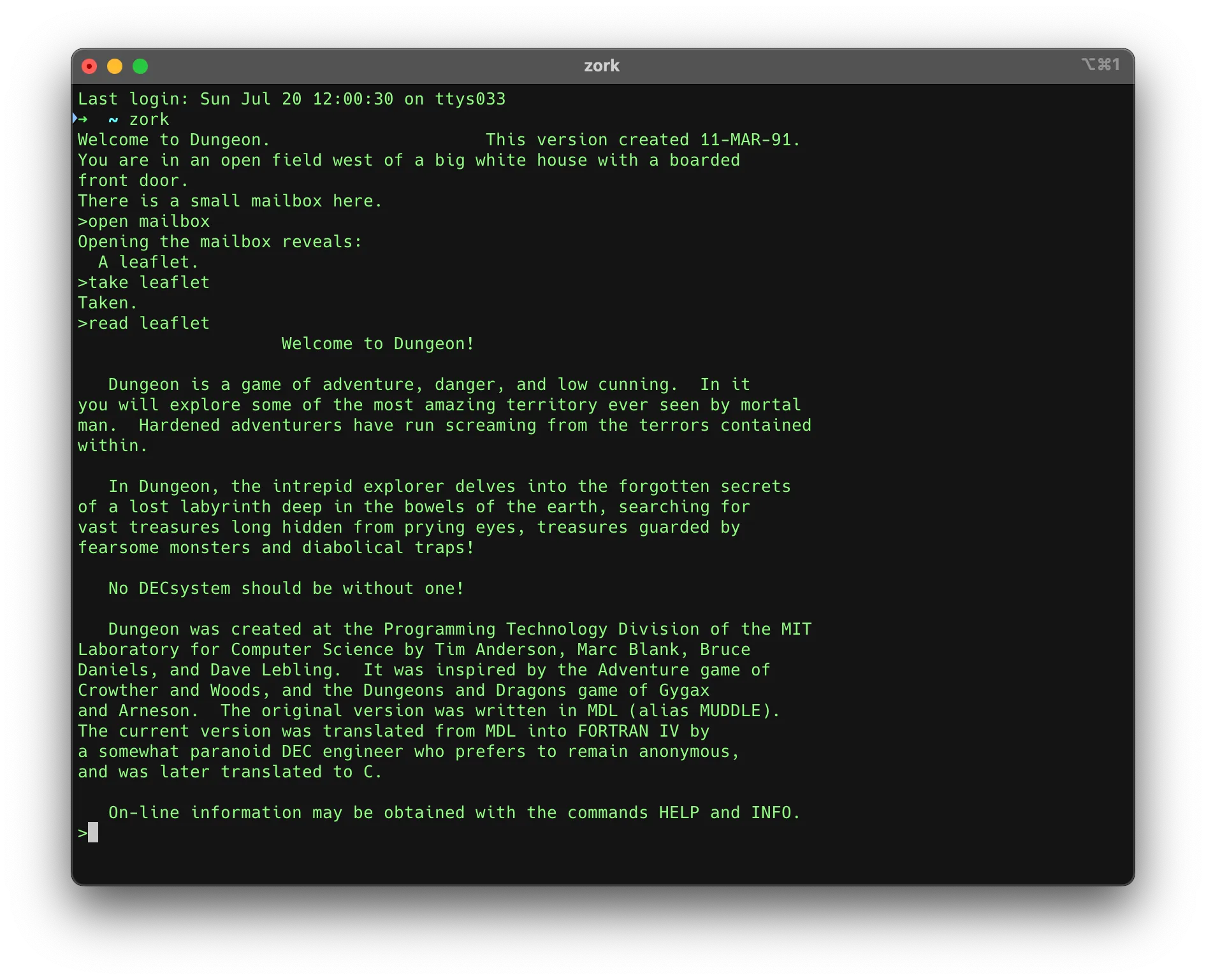

Zork running inside the terminal.

Zork running inside the terminal.

But a different idea recently struck me: now that AI has gotten so advanced, could it play a text adventure on its own? Could it read descriptions, understand context, navigate the world, manipulate objects, and actually solve puzzles? With modern context windows expanding faster than my todo list, could an AI keep the entire game state in memory and maybe even win?

Spoiler alert: it can’t. Not yet, anyway. But it was a fascinating experiment, and a surprisingly useful one. Trying to get a language model to play Zork turns out to be a pretty good way to test its reasoning, memory, and world interaction skills. Does it understand the task? Can it track its progress? Can it improvise? Can it actually finish the game?

Our AI adventurer is making friends.

Our AI adventurer is making friends.

Proof of Concept 1: Human in the Loop

I like to start every project with a proof of concept that tests the core idea with as little effort as possible. In this case, the question was simple: can an AI even grasp the concept of a text adventure, let alone play one? For this first experiment, I used ChatGPT.

I launched the game in my terminal and took on the role of a human API, manually copying the game’s output into the chat and pasting the AI’s responses back into the game. The conversation unfolded like this, with ChatGPT playing the role of the Clueless Adventurer and me as the ever-patient Dungeon Master:

I will post you the output of a text adventure. It is your objective to play and solve the game. Like with every text adventure, clues are within the given text and you can enter commands to get more information about the environment or move to a different location. Your responses will ONLY be the commands you decide to give, nothing else. Starting now. The game is called Zork. This is the first output: You are in an open field west of a big white house with a boarded front door. There is a small mailbox here.

> _open mailbox

(wow… it got the command right on first try). Opening the mailbox reveals: A leaflet.

> _take leaflet

Taken.

> _read leaflet

Dungeon is a game of adventure, danger, and low cunning. In it you will explore some of the most amazing territory ever seen by mortal man. Hardened adventurers have run screaming from the terrors contained within. In Dungeon, the intrepid explorer delves into the forgotten secrets of a lost labyrinth deep in the bowels of the earth, searching for vast treasures long hidden from prying eyes, treasures guarded by fearsome monsters and diabolical traps! No DECsystem should be without one! Dungeon was created at the Programming Technology Division of the MIT Laboratory for Computer Science by Tim Anderson, Marc Blank, Bruce Daniels, and Dave Lebling. It was inspired by the Adventure game of Crowther and Woods, and the Dungeons and Dragons game of Gygax and Arneson. The original version was written in MDL (alias MUDDLE). The current version was translated from MDL into FORTRAN IV by a somewhat paranoid DEC engineer who prefers to remain anonymous, and was later translated to C. On-line information may be obtained with the commands HELP and INFO.

> _look around

I don’t understand that.

> _look

You are in an open field west of a big white house with a boarded front door. There is a small mailbox here.

> _go north

You are facing the north side of a white house. There is no door here, and all the windows are barred.

> _Proof of Concept 1 Result: Success!

The conversation continued for a while until I eventually stopped the test. The AI was able to navigate the game and interact with the world and its objects. In this short conversation we could validate the following:

This felt like a small but exceptional achievement. I was genuinely impressed. For the next few minutes, the LLM kept making steady progress, navigating the eerie world of Zork like a slightly confused but determined adventurer.

Proof of Concept 2: Vibe Coding

It’s always tempting to jump straight from proof of concept to building the “real thing.” And that’s exactly what I did. In hindsight, I probably should have continued testing the AI via the manual approach first, just to be sure it could actually finish the game. But I was eager to get into vibe coding mode, so impatience won. The next step was obvious: remove the human-in-the-loop (me) and let the LLM interact with the game directly.

Since ChatGPT had handled the first test so well, I asked it for one final favor. I prompted it to write a system prompt — a kind of instruction manual — for other LLMs to follow its example and behave accordingly.

The system prompt:

You are playing a classic text adventure game like Zork. Your objective is to explore, solve puzzles, and complete the adventure by issuing valid game commands.

Rules:

1. Respond ONLY with a SINGLE game command each turn. Do NOT include explanations, reasoning, or multiple commands.

2. Commands should be concise, typically one or two words, using standard adventure verbs (e.g., north, take lamp, open door, examine object).

3. Always consider the game’s last output carefully to understand your surroundings and what actions are possible.

4. Avoid repeating identical commands that have already succeeded or failed; instead, try new logical actions or explore new areas.

5. If unsure, prioritize commands that gather information, such as "look", "examine [object]", or "inventory".

6. Use directions (north, south, east, west, up, down) to navigate through the environment.

7. When stuck, try interacting with objects in the environment or using items in your inventory.

8. Remember the goal: exploration, puzzle solving, and advancing the story.

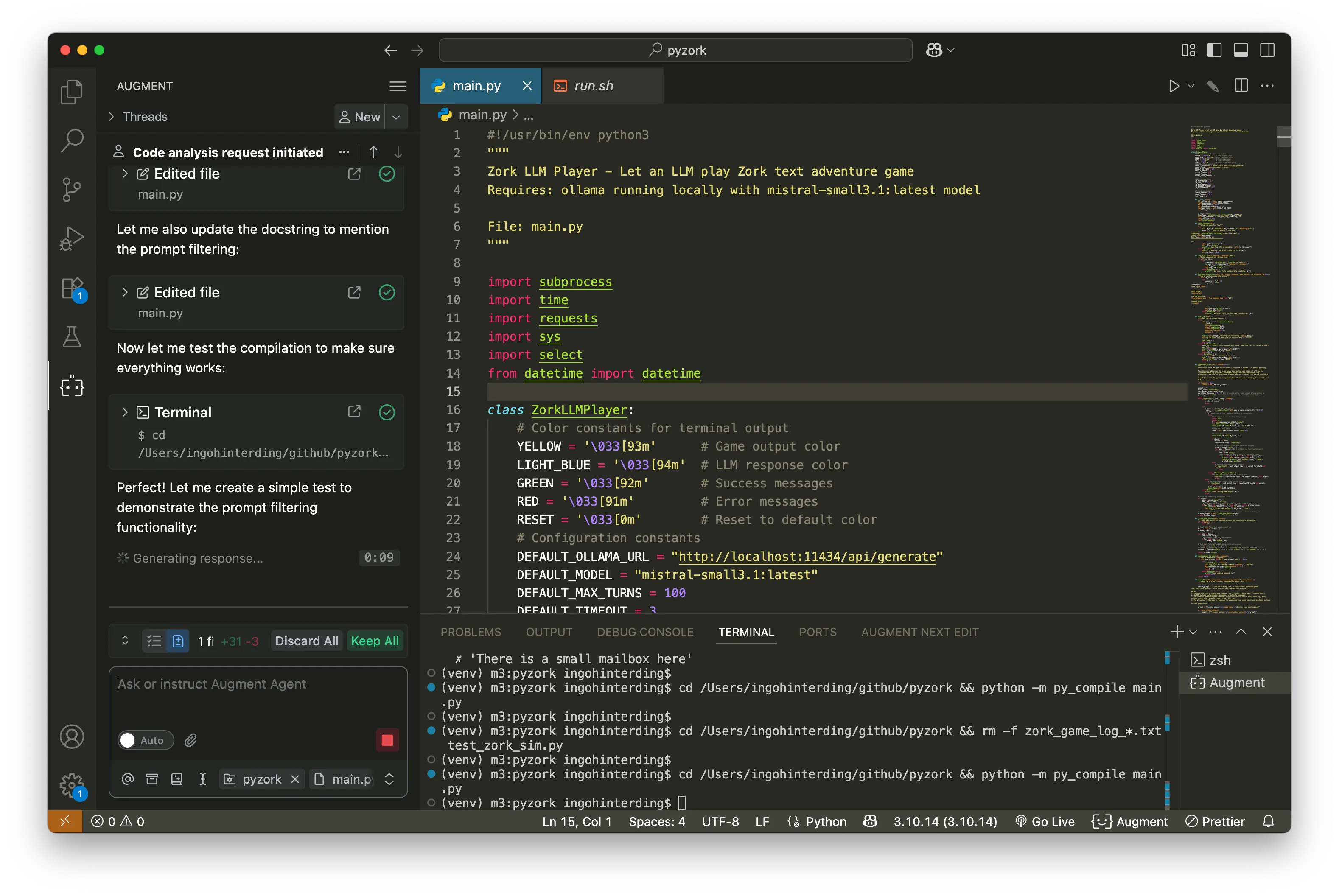

I usually start with Claude when drafting the initial code. For whatever reason, I tend to get the best results from this model during the early stages of development. Once I hit my daily quota, I switch over to VSCode and continue working with Augment as my AI coding agent. My first prompt is typically aimed at getting a high-level understanding of the concept and a rough sketch of the implementation:

I have a program running inside my mac terminal.

It is a text adventure which takes commands from the user and outputs the results of the instruction.

I want to create a script which let's a llm interact with the program and solve the text adventure.

What is the best technical approach to achieve this?

Claude suggested a python project with some of the usual libraries and a bash script, which confirmed what I had in mind, so I went with it:

create that script for me. some information

* use ollama API

* model name is mistral-small3.1:latest

* name of the terminal app is zork (typing "zork" into the terminal starts the game)

* create the requirements.txt file needed

* create a bash script which activates the virtual environment venv before launching the python script which calls main.py

* store the conversation in a log file

Interestingly, after fixing just a couple of small errors, the script was able to run and interact with the game. Pretty exciting! But with each new iteration, the code grew more and more complex, a classic side effect of vibe coding without stopping to refactor along the way. Fixing one issue would often break something else. Still, the core functionality held together, and I could actually watch the AI play the game. \o/

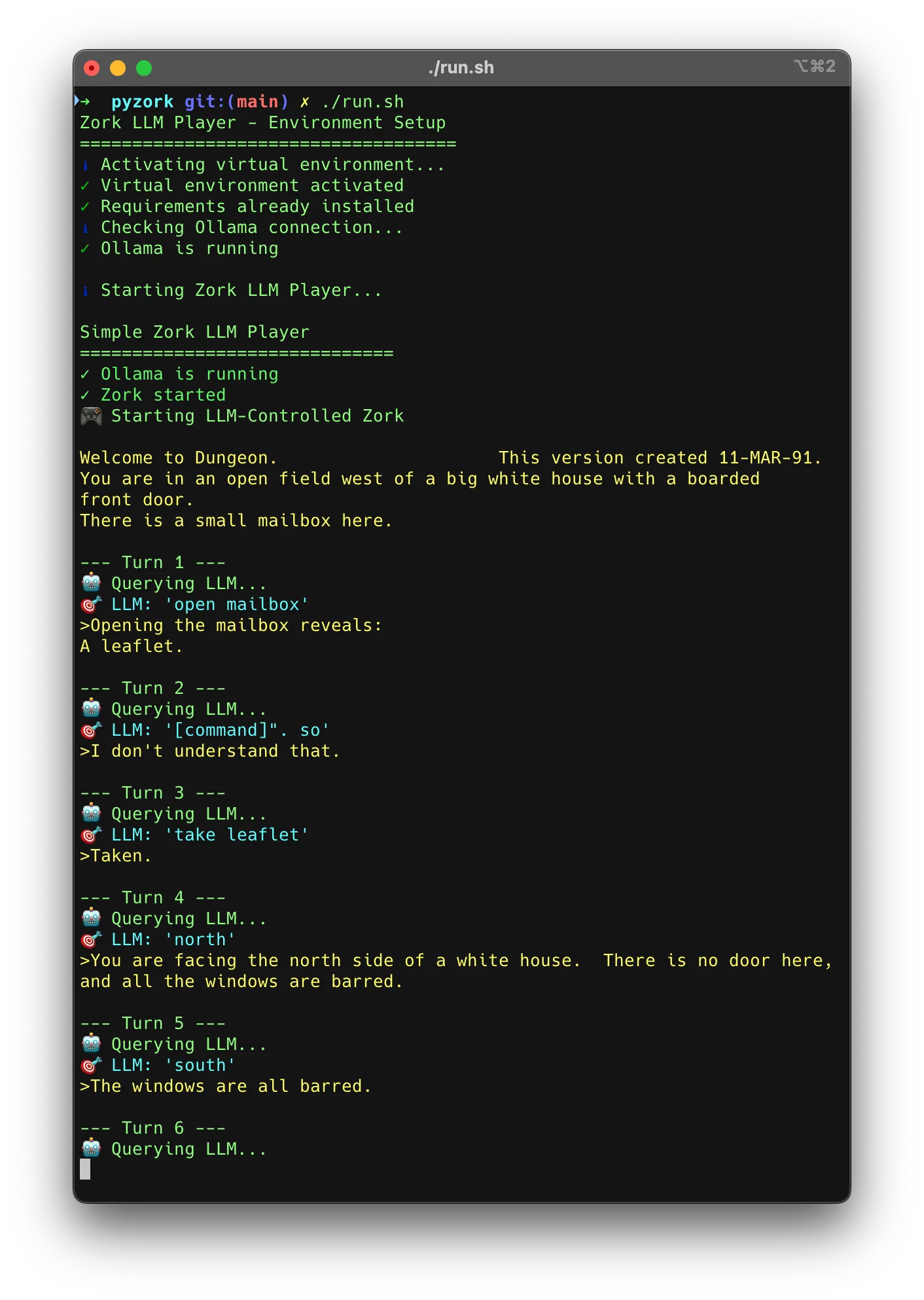

One of the better runs.

One of the better runs.

The full transcript of one of the better runs:

--- Turn 1 ---

🎯 LLM: 'open mailbox'

>Opening the mailbox reveals:

A leaflet.

--- Turn 2 ---

🎯 LLM: '[command]". so'

>I don't understand that.

--- Turn 3 ---

🎯 LLM: 'take leaflet'

>Taken.

--- Turn 4 ---

🎯 LLM: 'north'

>You are facing the north side of a white house. There is no door here,

and all the windows are barred.

--- Turn 5 ---

🎯 LLM: 'south'

>The windows are all barred.

--- Turn 6 ---

🎯 LLM: 'east'

>You are behind the white house. In one corner of the house

there is a window which is slightly ajar.

--- Turn 7 ---

🎯 LLM: 'go through'

>Huh?

--- Turn 8 ---

🎯 LLM: 'enter the'

>The window is closed.

--- Turn 9 ---

🎯 LLM: 'open window'

>With great effort, you open the window far enough to allow passage.

--- Turn 10 ---

🎯 LLM: 'go through'

>Huh?

--- Turn 11 ---

🎯 LLM: '<think>'

>I don't understand that.

--- Turn 12 ---

🎯 LLM: 'enter the'

>You are in the kitchen of the white house. A table seems to have

been used recently for the preparation of food. A passage leads to

the west, and a dark staircase can be seen leading upward. To the

east is a small window which is open.

On the table is an elongated brown sack, smelling of hot peppers.

A clear glass bottle is here.

The glass bottle contains:

A quantity of water.

--- Turn 13 ---

🎯 LLM: 'take sack'

>Taken.

--- Turn 14 ---

🎯 LLM: 'open sack'

>Opening the brown sack reveals:

A clove of garlic.

A lunch.

--- Turn 15 ---

🎯 LLM: '<think>'

>I don't understand that.

--- Turn 16 ---

🎯 LLM: 'look around'

>I don't understand that.

--- Turn 17 ---

🎯 LLM: 'take glass'

>Huh?

--- Turn 18 ---

🎯 LLM: 'take bottle'

>Taken.

--- Turn 19 ---

🎯 LLM: 'go west'

>You are in the living room. There is a door to the east. To the west

is a wooden door with strange gothic lettering, which appears to be

nailed shut.

In the center of the room is a large oriental rug.

There is a trophy case here.

On hooks above the mantlepiece hangs an elvish sword of great antiquity.

A battery-powered brass lantern is on the trophy case.

There is an issue of US NEWS & DUNGEON REPORT dated 11-MAR-91 here.

--- Turn 20 ---

🎯 LLM: '<think>'

>I don't understand that.

--- Turn 21 ---

🎯 LLM: '<think>'

>I don't understand that.

--- Turn 22 ---

🎯 LLM: 'open trophy'

>Huh?

--- Turn 23 ---

🎯 LLM: 'open trophy'

>Huh?

--- Turn 24 ---

🎯 LLM: 'open trophy'

>Huh?

--- Turn 25 ---

🎯 LLM: 'open trophy'

>Huh?

^C

Game interrupted by user

I got the best results with Qwen 3:8b, a reasoning-focused model. Most others failed right out of the gate. Mistral 3.1 small came close in terms of quality, but the inference time on my MacBook made it feel like playing Zork via carrier pigeon.

After countless test runs, I had to admit that none of the models managed to progress beyond the very first tasks: opening the mailbox, reading the leaflet, walking into the kitchen. Still, the fact that they got that far suggests that, in principle, it is possible to use an LLM to play a text adventure.

Of course, thanks to my reckless commitment to vibe coding, the codebase had become a glorious mess. Here’s one example, a function that ballooned with each iteration and probably shouldn’t have existed in the first place:

def extract_command_from_thinking(self, response):

"""Extract final command from thinking model response"""

if not response:

return "look"

import re

# Strategy 1: Look for FINAL_COMMAND: marker

final_command_match = re.search(r'FINAL_COMMAND:\s*([^\n]+)', response, re.IGNORECASE)

if final_command_match:

command = final_command_match.group(1).strip()

words = command.lower().split()[:2]

return " ".join(words) if words else "look"

# Strategy 2: Look for content after </think> tag

think_end_match = re.search(r'</think>\s*(.+)', response, re.IGNORECASE | re.DOTALL)

if think_end_match:

after_think = think_end_match.group(1).strip()

# Take first meaningful line after thinking

lines = after_think.split('\n')

for line in lines:

line = line.strip()

if line and not line.startswith(('I', 'The', 'Based', 'Looking', 'Given', 'So')):

words = line.lower().split()[:2]

if words and len(words[0]) > 1:

return " ".join(words)

# Strategy 3: Look for commands in the last part of the response

# Split by sentences and look for command-like patterns

sentences = re.split(r'[.!?]\s+', response)

for sentence in reversed(sentences[-3:]): # Check last 3 sentences

sentence = sentence.strip()

if sentence:

# Look for patterns like "I should X" or "Let me X" or just "X"

command_patterns = [

r'(?:i should|let me|i\'ll|i will)\s+([a-z\s]+)',

r'(?:try|do|use)\s+([a-z\s]+)',

r'^([a-z]+(?:\s+[a-z]+)?)$' # Simple command at start of sentence

]

for pattern in command_patterns:

match = re.search(pattern, sentence.lower())

if match:

command = match.group(1).strip()

words = command.split()[:2]

if words and words[0] in ['north', 'south', 'east', 'west', 'up', 'down', 'look', 'examine', 'take', 'open', 'close', 'read', 'inventory', 'drop', 'go', 'enter']:

return " ".join(words)

# Strategy 4: Remove thinking tags and extract from remaining text

clean_response = re.sub(r'<think>.*?</think>', '', response, flags=re.DOTALL | re.IGNORECASE)

clean_response = clean_response.strip()

if clean_response:

lines = clean_response.split('\n')

for line in lines:

line = line.strip()

if line and not line.startswith(('I', 'The', 'Based', 'Looking', 'Given')):

words = line.lower().split()[:2]

if words and len(words[0]) > 1:

return " ".join(words)

# Strategy 5: Look for common Zork actions in the response

game_commands = [

'open mailbox', 'take leaflet', 'read leaflet', 'inventory', 'examine mailbox',

'north', 'south', 'east', 'west', 'up', 'down', 'look', 'examine', 'take',

'open', 'close', 'read', 'drop', 'go', 'enter'

]

response_lower = response.lower()

for cmd in game_commands:

if cmd in response_lower:

return cmd

# Strategy 6: Handle incomplete responses (very common with long thinking)

incomplete_endings = ('<think', '<think>', 'if', 'But', 'However', 'maybe', 'according', 'Let', 'Alternatively', 'should', 'try', 'go', 'the')

if response.rstrip().endswith(incomplete_endings):

print("🔍 Detected incomplete response - using smart fallback")

# Analyze the thinking content for action hints

response_lower = response.lower()

# Check for specific action mentions in the thinking

if 'go east' in response_lower or 'try east' in response_lower:

return "east"

elif 'go west' in response_lower or 'try west' in response_lower:

return "west"

elif 'go north' in response_lower or 'try north' in response_lower:

return "north"

elif 'go south' in response_lower or 'try south' in response_lower:

return "south"

elif 'inventory' in response_lower:

return "inventory"

elif 'look' in response_lower:

return "look"

elif 'mailbox' in response_lower and 'open' in response_lower:

return "open mailbox"

elif 'leaflet' in response_lower and 'take' in response_lower:

return "take leaflet"

elif 'read' in response_lower and 'leaflet' in response_lower:

return "read leaflet"

else:

# Default exploration pattern

return "north"

return "look" # Ultimate fallback

What a mess.

At 700+ lines of code, I quit the vibing.

At 700+ lines of code, I quit the vibing.

At one point, I stripped out all the LLM-specific logic and focused purely on user input. The result was a cleaner script with about 170 lines in total. But the moment I started reintroducing the LLM code, the complexity crept back in and the codebase ballooned once again. That’s when I knew: it was time to call an end to the vibe coding experiment.

Proof of Concept 2 Result: Partial Success

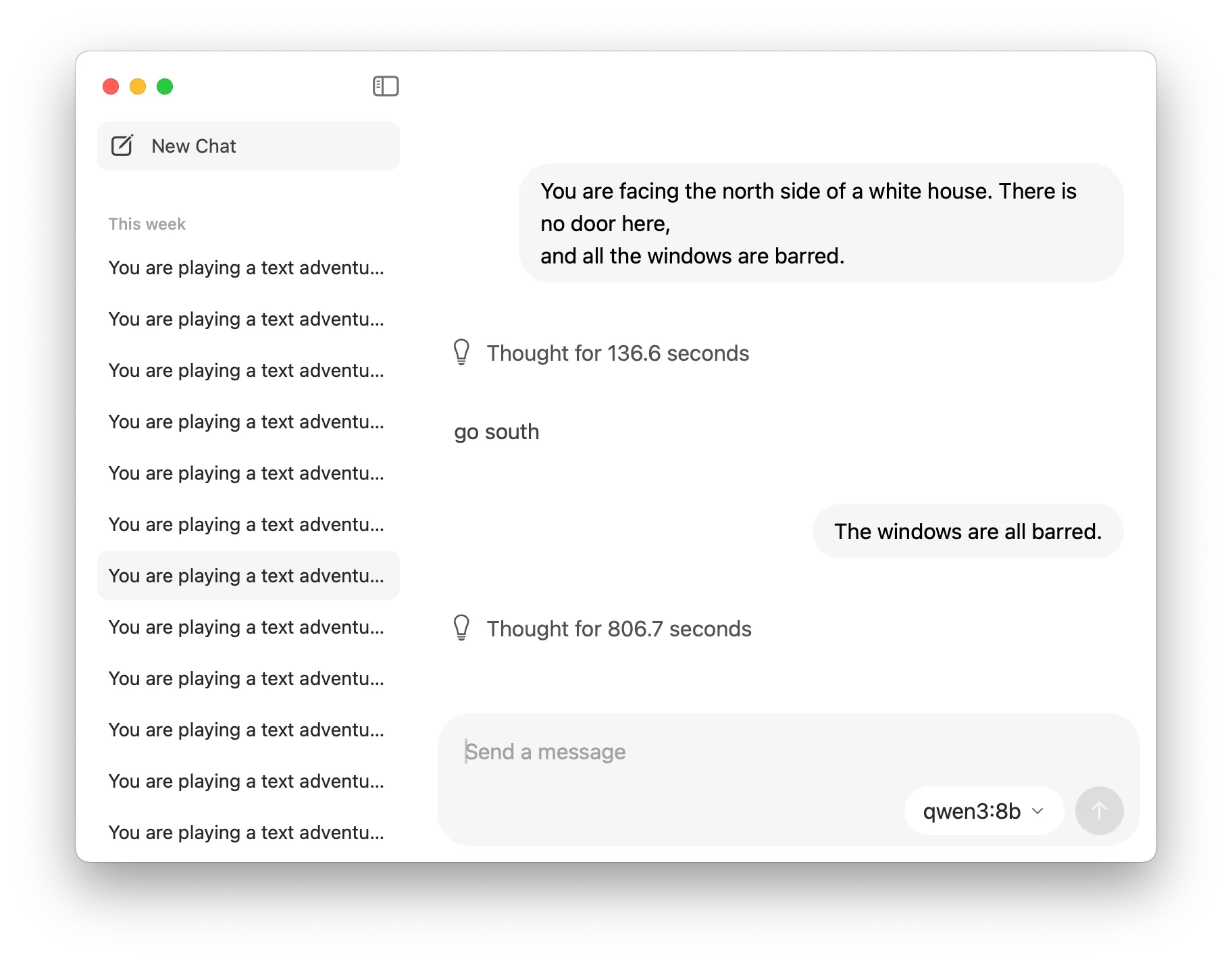

Proof of Concept 3: Local LLM in Ollama

I started to wonder: was ChatGPT actually that much better than local LLMs, or was my initial game session just a superficial fluke? (Spoiler: it was the latter.) Around the same time, Ollama released an update that finally added a simple UI for interacting with local models, previously, it was command-line only. So I decided to revisit the experiment using the same local models I’d tested earlier during the vibe coding phase. (If you’re curious about my tests with different local models, check out this post: 🇩🇪 Germany’s next top model.)

- gemma3:12b

- qwen3:8b

- qwen3:14b

- deepseek-r1:14b

- mistral-small3.1:24b

- llama3.1:8b

- gpt-oss:20b

Ollama’s new UI.

Ollama’s new UI.

This time, I ignored whether the model’s output strictly followed the command syntax expected by the game parser. Even when it added its own thoughts or internal reasoning, I let it slide. My main focus was whether the model could actually make progress in the game. I also chose to overlook the inference time, which occasionally stretched to ten minutes or more.

More importantly, I replaced the initial system prompt with a structured set of rules to guide the model’s behavior. I also included a comprehensive list of commands available in Zork (sourced from a fan website), hoping the model would learn to use them effectively.

The rule.txt file:

You are playing a text adventure game.

Your objective is to progress in the story and solve the quests and tasks.

The text adventure can be played by entering word combinations the text parser understands.

NEVER output anything other than the command (e.g. only output "open mailbox" instead of "The player should ask the game to open the mailbox next").

Do not think too long and always respond with a command.

When you are ready to start the game, write "I'm ready".

# General Approach

1. Always analyze the text from the game, it usually contains valuable information about how to progress, e.g. descriptions of the current location, directions to go, items which can be picked up and examined and so on.

2. Always try to pick up whatever is available and examine the object for new clues.

3. Only change the location when you do not have any other options in the current one.

4. Do not repeat the same actions, e.g. reading the same letter again and again.

5. Keep an inventory of your objects, they may be used in a location to progress, e.g. a ladder may later be used to access a roof.

6. Commands are usually one or two words, e.g. "open mailbox".

7. Never output multiple commands.

# Example

When you enter a new location:

- look around ("look")

- examine interesting objects from the given description

- if possible, pick up objects (e.g. "take ladder"), sometimes ("take all") works, too

- analyze if any object from your inventory is suitable to solve a task in the current location (e.g. "use ladder")

# List of common verbs the parser understands:

This list is incomplete, other commands can be tried, too.

Example commands:

north

go north

take leaflet

read leaflet

Movement Commands

north (move north.) south (move south.) east (move east.) west (move west.) northeast (move northeast.) northwest (move northwest.) southeast (move southeast.) southwest (move southwest.) up (move up.) down (move down.) look (looks around at current location.) save (save state to a file.) restore (restores a saved state.) restart (restarts the game.) verbose (gives full description after each command.) score (displays score and ranking.) diagnose (give description of health.) brief (give a description upon first entering an area.) superbrief (never describe an area.) quit (quit game.) climb (climbs up.) g (redo last command.) go (direction) (go towards direction (west/east/north/south/in/out/into).) enter (in to the place (window, etc.).) in (go into something (e.g. window).) out (go out of the place (e.g. kitchen).) hi / hello (say hello...)

Item Commands

get / take / grab (item) (removes item from current room; places it in your inventory.) get / take / grab all (takes all takeable objects in room.) throw (item) at (location) (throws the item at something.) open (container) (opens the container, whether it is in the room or your inventory.) open (exit) (opens the exit for travel.) read (item) (reads what is written on readable item.) drop (item) (removes item from inventory; places it in current room.) put (item) in (container) (removes item from inventory; places it in container.) turn (control) with (item) (attempts to operate the control with the item.) turn on / turn off (item) (turns the item on / off.) move (object) (moves a large object that cannot be picked up.) attack (creature) with (item) (attacks creature with the item.) examine (object) (examines, or looks, at an object or item or location.) inventory / i (shows contents of the inventory.) eat (eats item (specifically food).) shout / yell / scream (aaarrrrgggghhhh!) close [Door] (closes door.) tie (item) to (object) (ties item to object.) pick (item) (take / get item.) kill self with (weapon) (humorously commits suicide.) break (item) with (item) (breaks item.) kill (creature) with (item) (attacks creature with the item.) pray (when you are in temples...) drink (drink an item.) smell (smell an item.) cut (object/item) with (weapon) (strange concept, cutting the object/item. If object/item = self then you commit suicide.) bar (bar bar...) listen (target) (listens to a creature or an item.)

DeepSeek managed to read the leaflet and wander around a bit, but eventually got stuck in a loop. Being a reasoning model, it spent about 1600 tokens on average thinking, only to come up with the same “go east” command it had chosen on the previous turns.

Mistral 3.1 did not even respond in the UI, most likely because my MacBook did not have enough RAM to handle the 24 billion parameter model. I will have to repeat my tests on a more powerful machine, as this model is quite capable and used in some of our projects at CityLAB Berlin.

qwen3:8b tried to take the mailbox, then read the mailbox, and then opened it, only to get lost wandering in random directions afterward. Poor qwen, it must have been shocked by what it found inside the mailbox.

llama3.1:8b had a promising start: it read the leaflet and found the slightly open window. However, it tried to take objects that were not there. After realizing that it was only successful with the mailbox, it kept trying to take the mailbox repeatedly — about a hundred times.

mistral-nemo attempted to take a key as its first action, which was not present, and then gave up, falling into an infinite loop.

gemma3:12b repeatedly tried to take the mailbox, looked around, then tried to take the mailbox again, looked around, and repeated this cycle endlessly.

Well, that was not very successful.

Slightly disappointed, I tried ChatGPT again. Unfortunately, as of August 2025, OpenAI does not clearly show anymore which model is being used for a conversation. Since I had used it heavily earlier that day, I was apparently downgraded to a less capable version. Unlike in my first experiment, it did not perform any better than the local models this time.

Update August 6: Today, OpenAI’s first open weight model dropped out of nowhere, and of course I had to give it a try. The model is called gpt-oss and comes in 20 and 120 billion parameter sizes. I tested the smaller version. Like the other models, it neither solved the game nor made meaningful progress, but it performed best among the competition. It took a different path through the game and wandered into the forest. It discovered the locked grate under the pile of leaves and found the big tree. It even reached the correct conclusion to “climb [the] tree,” unfortunately only after it had already moved to a different location.

The model attempted to call an “examine leaves” assistant tool once (which freezes the UI) and had to “look” about every other turn. However, it also asked the game for “help,” which provided useful information and allowed the LLM to make some progress.

gpt-oss feels like the first model that makes me consider adapting the rules file and iterating on the testing setup. It shows real potential.

Update August 8: Another day, another milestone release from OpenAI, this time with the long-awaited gpt-5. To cut it short, since this article is already quite long: gpt-5 performed notably better than gpt-oss. It read the leaflet, climbed a tree in the forest, found the jewel-encrusted egg, climbed down, and discovered the grating hidden under the pile of leaves. It then tried to open it using items from its inventory. About every second command was actually useful (though it did a little too much looking around). Ultimately, after around 30 turns, it got stuck and lost its progress. But overall, this is a big step in the right direction.

Proof of Concept 3 Result: Failed

This concluded my LLM tests for the time being. While no model was able to make significant progress in the game, it was clear that we are getting close. I have no doubt that very soon this will be possible.

This left me with a final question: Can I solve the game myself?

Proof of Concept 4: Playing the game myself (finally)

Equipped with a glass of red wine and some suspenseful adventure music playing in the background, I was ready to enter the world of Zork myself.

I read the leaflet, walked around the old house, entered through the window, and explored the rooms. Hidden under an old rug was a trapdoor leading down into the cellar. Not long after, I met the first troll, which I swiftly defeated with my little glow-in-the-dark sword. Things were going great.

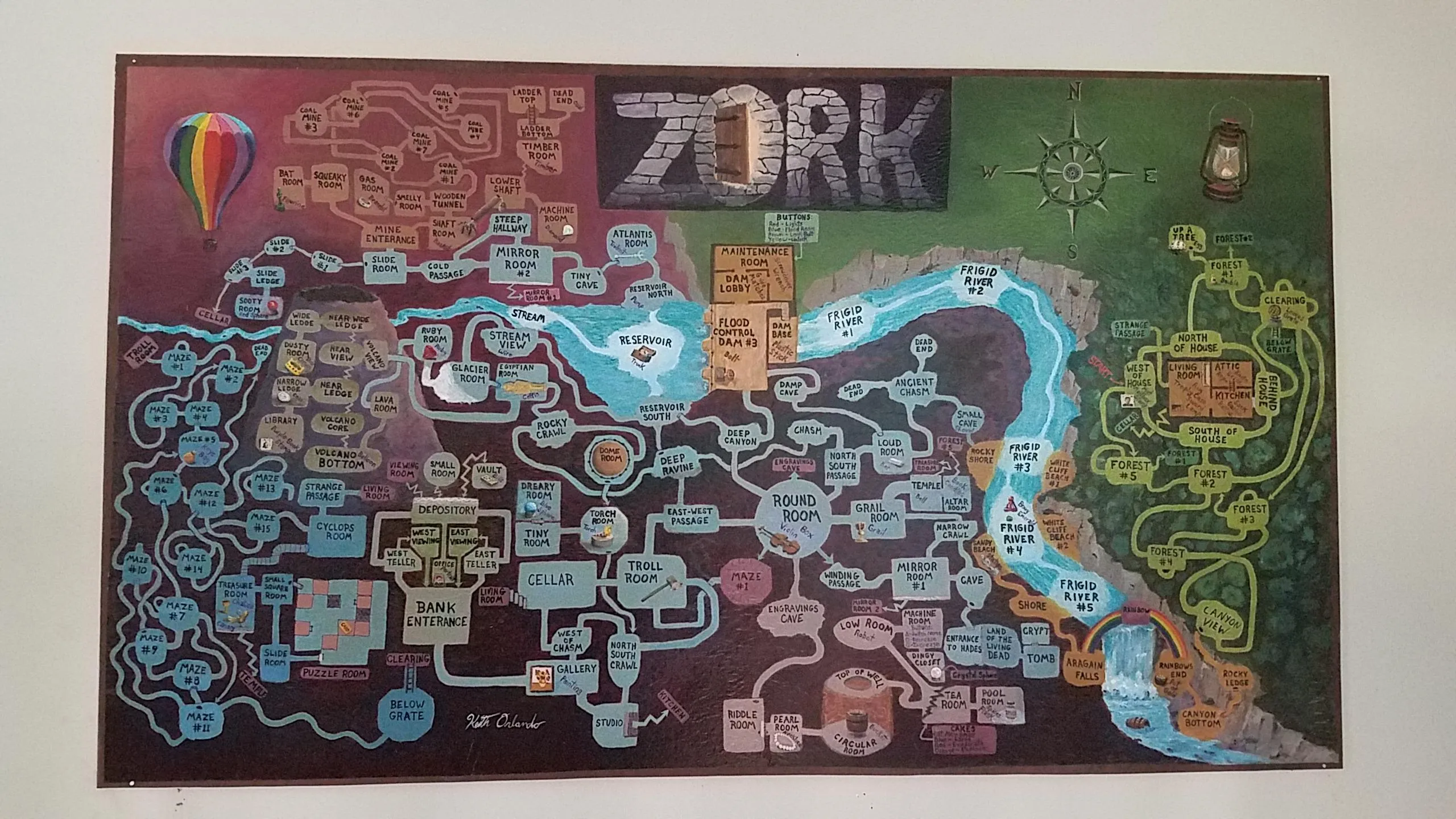

A map of the Zork world.

A map of the Zork world.

Well, until I realized that my assumption about directions in the game was incorrect. Sometimes going west from room A to room B, then going east from room B to room A, did not bring me back to the expected location. I quickly got lost. I searched the web for answers and found, besides the wonderfully drawn map above created by reddit user ion_bond, confirmation that the game does not always provide logical directions. The map solves this beautifully by showing winding paths.

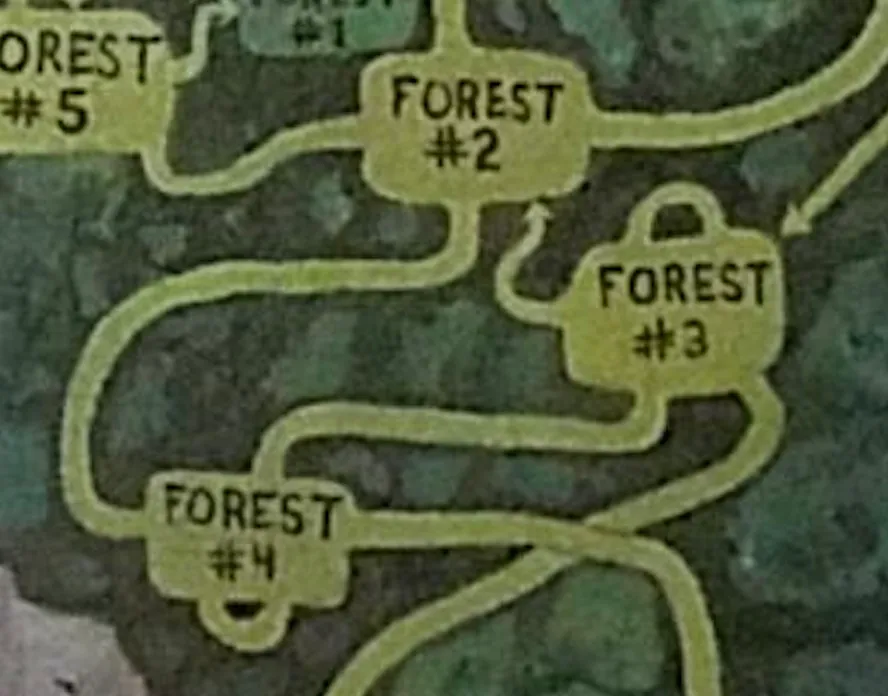

See this example:

It’s easy to get lost in Zork.

It’s easy to get lost in Zork.

Starting in Forest #2, you go south to Forest #4. If you then go back north, you end up in Forest #3 instead of Forest #2, which would now be to the east. Eventually, the wine ran out and I had to call it quits. I did not manage to solve the game, mostly due to my lack of patience, to be honest. I found the narrative writing less enjoyable than I had hoped. Some locations were described in great detail, while others were, quite frankly, a bit dull and unimaginative.

Proof of Concept 4 Result: Failed

Conclusion

While I did not succeed with my initial idea of letting an AI play and eventually solve a text adventure game on its own, I am quite pleased with how far I got. I am convinced that within the next few months, this will be possible, and I will definitely revisit this topic to benchmark new models against each other.

As with every project, I learned a lot along the way and came up with iterations of the idea, as well as completely new ones:

Thank you for reading. I hope you enjoyed following my exploration both in the game and the LLM space. I would be happy to hear your thoughts and ideas on this topic. Feel free to comment below if you want to share your perspective.